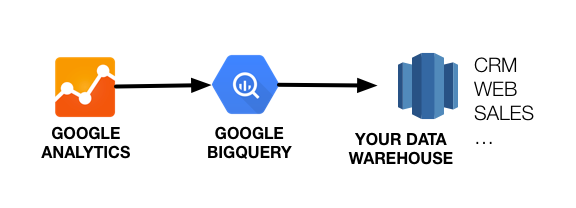

Google Analytics Premium clients have the option to export clickstream (hit-level) data into Google BigQuery through a native integration.

As I mentioned in the previous post clickstream data empowers analysts to answer much more complex (and valuable) business questions, namely by integration with other data sources (e.g. CRM).

Many technical teams are new to Google BigQuery and how to work with it. On top of that, the structure of how the Google Analytics (GA) Premium data is stored in BigQuery may not be obvious at first glance.

By following the instructions below, you’ll jump-start your knowledge and more quickly arrive at an ETL process that can bring this valuable clickstream data into your own data warehouse.

Why export Google Analytics BigQuery clickstream data?

In one word: data enrichment — but here are three specific points:

1) Business Logic Let’s say you have custom rules to determine a channel (combination of source, medium, campaign, etc) and you need to update the clickstream data with this new field. Google BigQuery doesn’t currently support UPDATE or DELETE operations. For some use cases, this can be problematic.

2) Analysis on Google Analytics BigQuery data can be a little cumbersome since data is scattered across multiple tables and is in a nested structure. While nesting is useful to express hierarchical data (reducing duplication) it can pose its own set of challenges to the average SQL user.

3) Data Integration You may have your own data warehouse already and you would like to analyze the clickstream data using your own infrastructure. Furthermore, you may want to join this data with your other data sources (CRM, Email, Social, etc) to increase context for improved business outcomes.

Below, I provide instructions on how to jump-start your journey towards exporting of clickstream data out of BigQuery and importing into your own data warehouse.

How to export Google Analytics clickstream data from BigQuery

Google Analytics Premium can provide daily exports of clickstream data into Google BigQuery and stores it using a nested JSON format (to avoid duplication and save space). Take a look at the nested schema

To export, the first step is to create a SQL query to select all hits for a given day without nesting.

One caveat though, Google Analytics clickstream data doesn’t have a column with a hit timestamp, you’ll need to create one by adding the hit time (hits.time) converted to seconds and adding the visitStartTime (visitStartTime+ hits.time/1000).

This new column is named hit_timestamp. Below is a snippet of the SQL query. You can find the complete SQL query on my GitHub.

-- Export Google Analytics BigQuery to flat clickstream data

-- Version: 1.0

-- URL: -

--

-- Authors: Joao Correia <[email protected]>

-- License: Apache License Version 2.0

SELECT

STRFTIME_UTC_USEC(SEC_TO_TIMESTAMP(visitStartTime+ hits.time/1000),"%Y-%m-%d %H:%M:%S") as hit.timestamp,

-- ROUND(visitStartTime + hits.time/1000) as hits.timestamp, /* Use for UNIX timestamp instead of timestamp */

visitNumber,

visitId,

fullVisitorId,

STRFTIME_UTC_USEC(SEC_TO_TIMESTAMP(visitStartTime),"%Y-%m-%d %H:%M:%S") as hit.visitStartTime,

LEFT(date,4)+"-"+SUBSTR(date,5,2)+"-"+RIGHT(date,2) as date,

trafficSource.referralPath,

trafficSource.campaign,

trafficSource.source,

trafficSource.medium,

trafficSource.keyword,

trafficSource.adContent,

device.browser,

device.browserVersion,

device.operatingSystem,

device.operatingSystemVersion,

device.isMobile,

device.mobileDeviceBranding, /* Only Availabe is later schemas */

device.flashVersion,

device.javaEnabled,

device.language,

device.screenColors,

device.screenResolution,

device.deviceCategory,

geoNetwork.continent,

geoNetwork.subContinent,

geoNetwork.country,

geoNetwork.region,

geoNetwork.metro

hits.type,

hits.social.socialInteractionNetwork,

hits.social.socialInteractionAction,

hits.hitNumber,

(hits.time/1000) as hits.time, /* Converted to seconds */

hits.hour,

hits.minute,

hits.isSecure,

hits.isInteraction,

hits.referer,

hits.page.pagePath,

hits.page.hostname,

hits.page.pageTitle,

hits.page.searchKeyword,

hits.page.searchCategory,

-- Ecommerce

hits.transaction.transactionId,

hits.transaction.transactionRevenue,

hits.transaction.transactionTax,

hits.transaction.transactionShipping,

hits.transaction.affiliation,

hits.transaction.currencyCode,

hits.transaction.localTransactionRevenue,

hits.transaction.localTransactionTax,

hits.transaction.localTransactionShipping,

hits.transaction.transactionCoupon,

hits.item.transactionId,

hits.item.productName,

hits.item.productCategory,

hits.item.productSku,

hits.item.itemQuantity,

hits.item.itemRevenue,

hits.item.currencyCode,

hits.item.localItemRevenue,

-- Enhanced Ecommerce

hits.eCommerceAction.action_type,

hits.eCommerceAction.step,

hits.eCommerceAction.option,

hits.product.productSKU,

hits.product.v2ProductName,

hits.product.v2ProductCategory,

hits.product.productVariant,

hits.product.productBrand,

hits.product.productRevenue,

hits.product.localProductRevenue,

hits.product.productPrice,

hits.product.localProductPrice,

hits.product.productQuantity,

hits.product.productRefundAmount,

hits.product.localProductRefundAmount,

hits.product.isImpression,

hits.refund.refundAmount,

hits.refund.localRefundAmount,

-- Promotion

hits.promotion.promoId,

hits.promotion.promoName,

hits.promotion.promoCreative,

hits.promotion.promoPosition,

-- Mobile App

hits.contentInfo.contentDescription,

hits.appInfo.name,

hits.appInfo.version,

hits.appInfo.id,

hits.appInfo.installerId,

hits.appInfo.appInstallerId,

hits.appInfo.appName,

hits.appInfo.appVersion,

hits.appInfo.appId,

hits.appInfo.screenName,

hits.appInfo.landingScreenName,

hits.appInfo.exitScreenName,

hits.appInfo.screenDepth,

hits.exceptionInfo.description,

hits.exceptionInfo.isFatal,

-- Events

hits.eventInfo.eventCategory,

hits.eventInfo.eventAction,

hits.eventInfo.eventLabel,

hits.eventInfo.eventValue,

-- Custom Dimensions (Add your custom dimensions by adding a line for each dimension)

MAX(IF (hits.customDimensions.index = 1, hits.customDimensions.value, NULL)) WITHIN RECORD AS dimension1,

MAX(IF (hits.customDimensions.index = 2, hits.customDimensions.value, NULL)) WITHIN RECORD AS dimension2,

MAX(IF (hits.customDimensions.index = 3, hits.customDimensions.value, NULL)) WITHIN RECORD AS dimension3,

-- Custom Metrics (Add your custom metrics by adding a line for each metric)

MAX(IF (hits.customMetrics.index = 1, hits.customMetrics.value, NULL)) WITHIN RECORD AS metric1,

MAX(IF (hits.customMetrics.index = 2, hits.customMetrics.value, NULL)) WITHIN RECORD AS metric2,

MAX(IF (hits.customMetrics.index = 3, hits.customMetrics.value, NULL)) WITHIN RECORD AS metric3,

-- Custom Variables (Use only the ones you need)

MAX(IF (hits.customVariables.index = 1, hits.customVariables.customVarName, NULL)) WITHIN RECORD AS cv1Key,

MAX(IF (hits.customVariables.index = 1, hits.customVariables.customVarValue, NULL)) WITHIN RECORD AS cv1Value,

FROM [dataset_id.ga_sessions_YYYYMMDD]Step-by-Step Instructions

-

Go to the Google Analytics BigQuery project and create a new dataset (in my example, I’ll call this ETL). This dataset will hold your clickstream data exports.

-

Grab the SQL query.

-

Edit the SQL query to tailor your Google Analytics implementation (customize the fields for dimensions, enhanced ecommerce, etc.) Don’t add more dimensions/metrics than the ones your current implementation is using.

-

Paste your query into the query window.

-

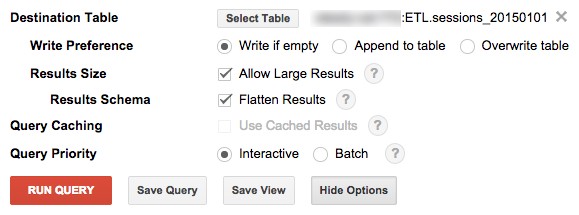

Select ‘Show Option’ and configure a destination table (in my example sessions_20150101). Select the dataset you created in ‘step 1′ and name the table appropriately. While you are testing you can add a ‘LIMIT’ to the SQL query to limit the number of returned rows.

-

Once the query is finished, you will have your flattened clickstream data in the new table under the new dataset. You can append other tables to this table for analysis or export it to a CSV for storage or analysis in another platform.

Exporting Google Analytics Clickstream Data to Your Own Data Warehouse

-

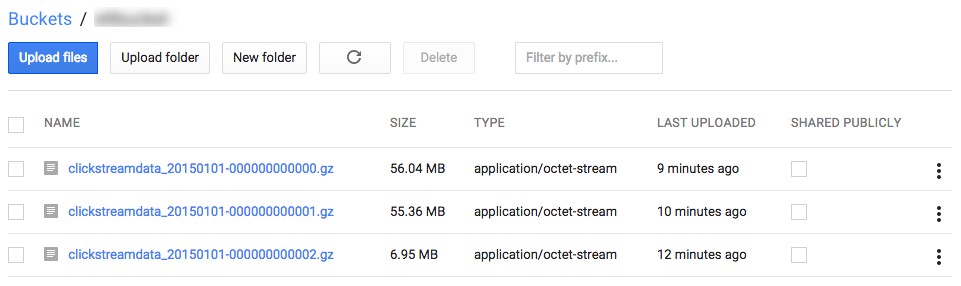

Create a bucket in Google Storage under the same project.

-

Export the table to Google Storage and download it for analysis or load into your data warehouse. The file pattern will divide the file into multiples and compress them using gzip. This is handy for analysis with Hive (Hadoop). The format itself is a CSV.

The exported data is now in Google Storage:

You can now create an ETL job to automate any of the processes described above to keep your data warehouse data up-to-date.